Last week Google announced its new OS for virtual and augmented reality devices, called Android XR. At the unveiling they demoed the forthcoming headset from Samsung, which looks an awful lot like the Apple Vision Pro. No surprise there, as this long-ago teased product was delayed once Samsung got a look at Apple’s industry-leading design. Will the VR headset market play out like the smartphone market did? Read on…

First things first. When Apple announced the Vision Pro, the thing that stood out to me was the user interface design. Apple is really good at this. Better than anyone else in the tech industry. They popularized the mouse and graphical user interface, the pinch and zoom touchscreen on smartphones, and so much more. The breakthrough invention of the Vision Pro was “look and pinch.”

Using sophisticated eye tracking, the headset can tell what you’re looking at with amazing accuracy. And with cameras on the outside of the headset, when you pinch your fingers together, whatever you’re looking at is activated. It’s the most natural computer interface yet. You just look at something, make a tiny finger gesture, and you’re interacting with a virtual computing environment.

As I predicted in June 2023, Google and Samsung copied this feature, which will make it an industry standard. Meta screwed up by not including eye tracking in the Quest 3, something I am sure they will rectify in future versions.

But Google’s Android XR features something even better than Apple’s “look and pinch” interface. They’re going all in with Gemini AI as the primary method for input. And yeah, I predicted this one too. Speaking to a computer is the most natural interface you can find. Combined with eye tracking and hand sensing, all of a sudden we are communicating with a computer just the way we do with a human.

The emergence of large language model AI has enabled this seemingly overnight. A few years ago I called your attention to Whisper AI, an offshoot project from OpenAI (the makers of ChatGPT). It could read any text and turn it into natural voice. If it can create voice from text, it can do the reverse, taking your voice and turning it into text. Add that capability to an LLM, and voilà! You have a computer interface.

The striking thing is how fast this is all happening. Within two years we went from an obscure online demo of an AI reading text, to an entire XR operating system that uses natural spoken language as an interface.

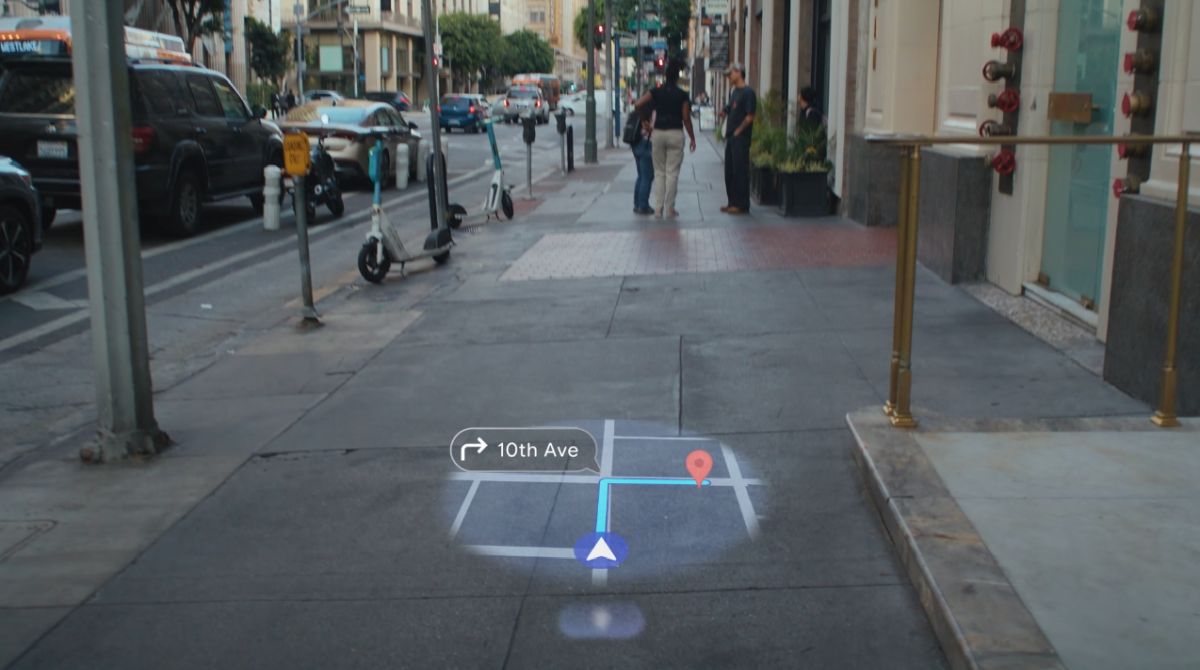

But it’s not just voice that’s integrated with Gemini AI. The forward-facing cameras are integrated too. That means that the AI can “see” what’s happening in the “real world” and offer contextually relevant information, either via a visual display or by voice.

Along with the Android XR announcement, Samsung unveiled their forthcoming mixed reality headset, code-named Project Moohan.

This first real VR headset from Samsung will be the first product to ship with Android XR. It’s no surprise that Google and Samsung have partnered up in an attempt to compete with Apple and Meta. And as you can see from the photo, Samsung took the ski goggles design of the Vision Pro as the inspiration, just as they copied the look of the iPhone for their first touchscreen smartphones. And that’s OK. Consistency of design and function will help the industry scale.

While no date next year has been announced, the limited nature of the demos suggests it will be later in the year. No price has been announced either, but I expect it will be in the $1,500 to $2,000 range. Where the Vision Pro has a full M-class system on chip like those powering the MacBook Pro line, the Moohan will feature a mobile chipset from Qualcomm. (There is no official announcement yet as to whether it’s the XR2 that most other headsets use or something else.)

Also shown at the keynote, and garnering a lot more media excitement, was a pair of smartglasses that snuck into the demo. Google killed its “Glass” product line in 2023, but kept the research and development going in their Android product line. After witnessing the success of Meta’s Ray Bans, they pivoted that entire project to be more ecosystem based. They’ll be looking for companies to manufacture smartglasses on Android XR.

The integration of AI and smartglasses stands to be a very powerful combination. One of the challenges with using AI is prompting. But give it eyes, and now you can just look at something and ask it a question. Part of the media demo prompted users to look at a book and ask Gemini for information about it. It recognized the cover and read off a litany of details.

That last part is due to the integration of Google’s Project Astra AI universal agent as part of the Android XR platform. It’s still a research project, but it’s expanding into tests on smartphones so it can be ready when AR glasses hit the market in 2026 or beyond.

AI agents promise to be our personal assistants, taking on and automating tasks that are tedious or repetitive. They will remember things based on the context of where you are and what you’re doing. Going to the gym? Your agent will remember your locker combo. Walking by a florist on your anniversary? It will remind you to pick up a bouquet.

Smartglasses integrated with AI are probably going to be the next big computing device. They’re small, lightweight, fashionable, and could potentially replace the smartphone. The AI-powered audio integration removes the requirement to have an amazing, wide field of view display – a battery-draining technology that is still years away.

With AI smartglasses, the visual display becomes a secondary means of delivering information. You might see arrows pop up in your vision when it’s time to make a turn, or a list of things to select from for more information. The main computing interface will be voice. The Android XR demo showcased this clearly. The graphics on the glasses demo were decidedly low impact.

Meta has a multi-year head start on the smartglasses market. Google said it might be 2026 or later before we see Android XR smartglasses. And Apple has not even talked about glasses yet, but most people assume they’re working on them. Will Google and Samsung being in the market pressure Apple to accelerate? It certainly seems like Meta’s grip on the VR market pushed Apple to release the Vision Pro before it was ready for prime time.

If anyone thought that virtual reality was dead, this announcement should have them guessing again. The three biggest tech companies on the planet are all now competing for dominance in the VR space. Meta owns the VR gaming market and has announced that it is abstracting Horizon OS into a platform third parties can develop on. Earlier this year they announced that Microsoft, Lenovo and Asus had signed on to develop headsets on Horizon.

Zuckerberg made a big deal out of saying Horizon OS would be the “open alternative” to Apple’s closed ecosystem. There’s been much debate as to what “open” means to Meta. Earlier this month it was reported by The Information that Meta rejected using Android XR for the Quest. Horizon is built on an open-source version of Android, which doesn’t give Meta access to the millions of Android apps that run on smartphones.

Now, with Android XR on the market, it’s hard to see Meta being able to compete in the OS space. With Google’s experience building developer tools, and an existing app ecosystem, Android XR is going to be more trusted by companies jumping into the market.

That might leave Meta’s Horizon as the gaming platform of the future, Android as the common, generic XR OS, and Apple’s visionOS as the premium product that gobbles up all the profits, much like Apple does with smartphones and laptops.

It’s going to be an interesting few years to see how this all shakes out. I have no idea what this means for LBE, other than more consumers will have more choices, and more developers will be coming on board to create experiences. The market for XR will continue to grow, albeit way more slowly than anyone imagined. The wildcard is AI smartglasses, which really aren’t XR unless you really stretch the definition. We could see those as ubiquitous devices within 2-3 years.